The Wisdom of Public Prediction Markets And The Limits Of Statistics

Posted by Big Gav in black swan, kevin kelly, prediction, predictive markets, statistics

Kevin Kelly has an interesting (and thorough) look at the state of play in predictive markets at the Long Now blog - The Wisdom of Public Prediction Markets.

Prediction markets continue to proliferate. These communities use money to bet on outcomes in the future. If a prediction comes true, the winners reap the money from the losing betters. The price of a bet, or share, fluctuates over time — and thus can be used as a signal for the community’s opinion. In theory a prediction market taps into the “wisdom of crowds,” but can also be viewed as conventional wisdom. However the results of prediction markets have been proven to be reliable conventional wisdom. (See my previous post on the subject.)

There are two kinds of prediction markets: ones where you bet real money, and ones where you bet funny money. Since betting real money keeps people honest (to reduce their losses), markets with real money are considered a much better indicator of opinion than a mere poll — which has no “penalty” for being less than honest. But real money prediction markets are (stupidly) illegal in the US. So token markets like Long Bets and Bet2Give are devised to innovate around the law.

For instance, Hubdub trades token dollars. You are given $1,000 hubdubs at the start, and $20 each day you log on. You win or lose these token dollars on various predictions. There is a leaderboard which displays the highest ranked traders, showing how much they have gained in the last quarter. One fellow gained $1 million hubdubs, and now has a net worth of $3 million. Hubdub dollars are only good for bragging rights.

One clarification of how the price of a bet works (from Hubdub’s FAQ): If a prediction has a yes value of 43%, does that mean that 43% of people have voted yes?

No, not really. The forecast is dependent on both the number of people who have selected this outcome and the amount they have risked on it. Very roughly, 43% means that 43% of the money risked by users is riding on that outcome.
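The FAQ's "very roughly" reading can be illustrated with a small sketch. It treats the price of an outcome as nothing more than that outcome's share of all money risked. This is an assumption for illustration, not Hubdub's actual pricing mechanism; the function name `implied_forecast` and the stakes are hypothetical.

```python
def implied_forecast(stakes):
    """Return each outcome's share of the total money risked."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("no money has been risked yet")
    return {outcome: amount / total for outcome, amount in stakes.items()}


# Hypothetical stakes: two large "yes" bets against ten small "no" bets.
yes_bets = [300, 130]
no_bets = [57] * 10

forecast = implied_forecast({"yes": sum(yes_bets), "no": sum(no_bets)})
voter_share_yes = len(yes_bets) / (len(yes_bets) + len(no_bets))

# The price follows the money, not the head count.
print(f"yes price: {forecast['yes']:.0%}, yes voters: {voter_share_yes:.0%}")
# prints "yes price: 43%, yes voters: 17%"
```

In this made-up case only two of twelve traders picked "yes", yet the price is 43% because those two risked far more than the others.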

I was curious how closely the two formats (real and token money) might match each other so I hunted for a bet that I thought most prediction markets might share: the outcome of the US presidential election. From my brief survey, betting real dollars and token dollars give similar results. More so, there is a pretty close convergence of price among all the prediction markets ...

My conclusion is that token money prediction markets carry the same validity as real money prediction markets, and that they are fairly consistent across markets. In that sense they are probably reliable indicators of what people believe at this moment (not to be confused with reliable predictions).

Prediction markets aren't a new idea of course - John Brunner made them a central feature of The Shockwave Rider and pointed out that if they become ubiquitous they are just as likely to become a tool for manipulating public perception as they are to be a useful way of gauging it (and perhaps predicting future events). The key to making them successful is absolute transparency - all market participants need to be sure that the data they see is accurate (and how everyone else "voted").

Predictive markets are the sort of thing the folks at The Arlington Institute like to mess about with, though their latest scheme is a little more metaphysical in nature (and highly reminiscent of the opening sections of "The Men Who Stare At Goats") - TAI Alert 15 - Impending Event Alert.

Here at the Arlington Institute, we have worked with real precognizant dreamers who have had experience with intelligence services and we have subsequently learned about the hundreds of case studies of individuals who had explicit dreams about the 9/11 affair (people jumping out of burning high rise buildings, etc.), beginning some six months before the event. We have been intrigued with the notion that the human collective unconscious somehow anticipates large impending perturbations. Our WHETHEReport project, for which we are looking for funding, is in fact based upon this dynamic. In telling people about this project I have received strong confirmations of the efficacy of the underlying logic from many individuals around the world.

Well, in the last two days I have received four independent, explicit indications from far removed friends suggesting that something very substantial and disruptive is going to happen to the U.S. within the next 60 days or so. If these warnings manifest themselves in an event of the significance of something like 9/11 then people all over the world should begin to experience dreams and other intuitions suggesting that something extraordinary is about to happen.

So I’m asking you to participate in an experiment with us. If you, or someone you know, experiences any kind of significant suggestion (dream, intuition, etc.) that something big and disruptive is about to happen in the coming weeks, send us a note and tell us about it. We’ll compile them all and see if we can find any patterns or pointers toward an actual future event. Just cut and paste the form below into an email and fill it out and send it in. I’ll let you know what happens. If you have multiple experiences, please send them along too.

You don’t need to include any identification and we’ll certainly keep all of this information confidential.

Besides the "Desperately Seeking Psychics" ad above, TAI also issued another alert recently, this one noting the financial system seems to be on the verge of meltdown (something David Martin predicted a while back, to be fair) - TAI Alert: 16 - The Next Step in the Unwinding of the Economy.

Nassim Nicholas Taleb (of "Black Swan" fame) is a man who has scant respect for any sort of prediction-merchant, be they individuals or markets assessing the wisdom of the masses. His latest essay is up at the EDGE - THE FOURTH QUADRANT: A MAP OF THE LIMITS OF STATISTICS.

Statistical and applied probabilistic knowledge is the core of knowledge; statistics is what tells you if something is true, false, or merely anecdotal; it is the "logic of science"; it is the instrument of risk-taking; it is the applied tools of epistemology; you can't be a modern intellectual and not think probabilistically—but... let's not be suckers. The problem is much more complicated than it seems to the casual, mechanistic user who picked it up in graduate school. Statistics can fool you. In fact it is fooling your government right now. It can even bankrupt the system (let's face it: use of probabilistic methods for the estimation of risks did just blow up the banking system).

The current subprime crisis has been doing wonders for the reception of any ideas about probability-driven claims in science, particularly in social science, economics, and "econometrics" (quantitative economics). Clearly, with current International Monetary Fund estimates of the costs of the 2007-2008 subprime crisis, the banking system seems to have lost more on risk taking (from the failures of quantitative risk management) than every penny banks ever earned taking risks. But it was easy to see from the past that the pilot did not have the qualifications to fly the plane and was using the wrong navigation tools: The same happened in 1983 with money center banks losing cumulatively every penny ever made, and in 1991-1992 when the Savings and Loans industry became history.

It appears that financial institutions earn money on transactions (say fees on your mother-in-law's checking account) and lose everything taking risks they don't understand. I want this to stop, and stop now— the current patching by the banking establishment worldwide is akin to using the same doctor to cure the patient when the doctor has a track record of systematically killing them. And this is not limited to banking—I generalize to an entire class of random variables that do not have the structure we think they have, in which we can be suckers.

And we are beyond suckers: not only, for socio-economic and other nonlinear, complicated variables, we are riding in a bus driven by a blindfolded driver, but we refuse to acknowledge it in spite of the evidence, which to me is a pathological problem with academia. After 1998, when a "Nobel-crowned" collection of people (and the crème de la crème of the financial economics establishment) blew up Long Term Capital Management, a hedge fund, because the "scientific" methods they used misestimated the role of the rare event, such methodologies and such claims on understanding risks of rare events should have been discredited. Yet the Fed helped their bailout and exposure to rare events (and model error) patently increased exponentially (as we can see from banks' swelling portfolios of derivatives that we do not understand).

Are we using models of uncertainty to produce certainties?

This masquerade does not seem to come from statisticians—but from the commoditized, "me-too" users of the products. Professional statisticians can be remarkably introspective and self-critical. Recently, the American Statistical Association had a special panel session on the "black swan" concept at the annual Joint Statistical Meeting in Denver last August. They insistently made a distinction between the "statisticians" (those who deal with the subject itself and design the tools and methods) and those in other fields who pick up statistical tools from textbooks without really understanding them. For them it is a problem with statistical education and half-baked expertise. Alas, this category of blind users includes regulators and risk managers, whom I accuse of creating more risk than they reduce.

So the good news is that we can identify where the danger zone is located, which I call "the fourth quadrant", and show it on a map with more or less clear boundaries. A map is a useful thing because you know where you are safe and where your knowledge is questionable. So I drew for the Edge readers a tableau showing the boundaries where statistics works well and where it is questionable or unreliable. Now once you identify where the danger zone is, where your knowledge is no longer valid, you can easily make some policy rules: how to conduct yourself in that fourth quadrant; what to avoid.

So the principal value of the map is that it allows for policy making. Indeed, I am moving on: my new project is about methods on how to domesticate the unknown, exploit randomness, figure out how to live in a world we don't understand very well. While most human thought (particularly since the enlightenment) has focused us on how to turn knowledge into decisions, my new mission is to build methods to turn lack of information, lack of understanding, and lack of "knowledge" into decisions—how, as we will see, not to be a "turkey". ...

I start with my old crusade against "quants" (people like me who do mathematical work in finance), economists, and bank risk managers, my prime perpetrators of iatrogenic risks (the healer killing the patient). Why iatrogenic risks? Because, not only have economists been unable to prove that their models work, but no one managed to prove that the use of a model that does not work is neutral, that it does not increase blind risk taking, hence the accumulation of hidden risks.

Figure 1 My classical metaphor: A Turkey is fed for 1000 days—every day confirms to its statistical department that the human race cares about its welfare "with increased statistical significance". On the 1001st day, the turkey has a surprise.

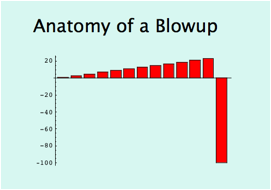

Figure 2 The graph above shows the fate of close to 1000 financial institutions (includes busts such as FNMA, Bear Stearns, Northern Rock, Lehman Brothers, etc.). The banking system (betting AGAINST rare events) just lost > 1 Trillion dollars (so far) on a single error, more than was ever earned in the history of banking. Yet bankers kept their previous bonuses and it looks like citizens have to foot the bills. And one Professor Ben Bernanke pronounced right before the blowup that we live in an era of stability and "great moderation" (he is now piloting a plane and we all are passengers on it).

Figures 1 and 2 show you the classical problem of the turkey making statements on the risks based on past history (mixed with some theorizing that happens to narrate well with the data). A friend of mine was sold a package of subprime loans (leveraged) on grounds that "30 years of history show that the trade is safe." He found the argument unassailable "empirically". And the unusual dominance of the rare event shown in Figure 3 is not unique: it affects all macroeconomic data—if you look long enough almost all the contribution in some classes of variables will come from rare events (I looked in the appendix at 98% of trade-weighted data).
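The turkey's reasoning can be sketched numerically. The sketch below uses Laplace's rule of succession as the turkey's naive estimator; that choice is an assumption made here for illustration, not a method given in the essay, and `confidence_after` is a hypothetical name.

```python
from fractions import Fraction


def confidence_after(days_fed):
    """Laplace's rule of succession: the estimated chance of being fed
    tomorrow after `days_fed` consecutive feedings and no failures."""
    return Fraction(days_fed + 1, days_fed + 2)


# The estimate only ever rises, because the history contains no bad day.
for day in (1, 10, 100, 1000):
    estimate = float(confidence_after(day))
    print(f"day {day:>4}: estimated P(fed tomorrow) = {estimate:.4f}")

# Confidence peaks on day 1000, immediately before the surprise.
peak = confidence_after(1000)
print(f"peak confidence before day 1001: {float(peak):.4f}")
```

The estimator is at its most confident exactly when the risk is about to materialize, since the one event that matters has never appeared in the sample.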

Now let me tell you what worries me. Imagine that the Turkey can be the most powerful man in world economics, managing our economic fates. How? A then-Princeton economist called Ben Bernanke made a pronouncement in late 2004 about the "new moderation" in economic life: the world getting more and more stable—before becoming the Chairman of the Federal Reserve. Yet the system was getting riskier and riskier as we were turkey-style sitting on more and more barrels of dynamite—and Prof. Bernanke's predecessor the former Federal Reserve Chairman Alan Greenspan was systematically increasing the hidden risks in the system, making us all more vulnerable to blowups.

By the "narrative fallacy" the turkey economics department will always manage to state, before Thanksgiving, that "we are in a new era of safety", and back it up with thorough and "rigorous" analysis. And Professor Bernanke indeed found plenty of economic explanations—what I call the narrative fallacy—with graphs, jargon, curves, the kind of facade-of-knowledge that you find in economics textbooks. (This is the kind of glib, snake-oil facade of knowledge—even more dangerous because of the mathematics—that made me, before accepting the new position in NYU's engineering department, verify that there was not a single economist in the building. I have nothing against economists: you should let them entertain each other with their theories and elegant mathematics, and help keep college students inside buildings. But beware: they can be plain wrong, yet frame things in a way to make you feel stupid arguing with them. So make sure you do not give any of them risk-management responsibilities.) ...

Many researchers, such as Philip Tetlock, have looked into the incapacity of social scientists in forecasting (economists, political scientists). It is thus evident that while the forecasters might be just "empty suits", the forecast errors are dominated by rare events, and we are limited in our ability to track them. The "wisdom of crowds" might work in the first three quadrants; but it certainly fails (and has failed) in the fourth.

Living In The Fourth Quadrant

Beware the Charlatan. When I was a quant-trader in complex derivatives, people mistaking my profession used to ask me for "stock tips" which put me in a state of rage: a charlatan is someone likely (statistically) to give you positive advice, of the "how to" variety.

Go to a bookstore, and look at the business shelves: you will find plenty of books telling you how to make your first million, or your first quarter-billion, etc. You will not be likely to find a book on "how I failed in business and in life"—though the second type of advice is vastly more informational, and typically less charlatanic. Indeed, the only popular such finance book I found that was not quacky in nature—on how someone lost his fortune—was both self-published and out of print. Even in academia, there is little room for promotion by publishing negative results—though these are vastly informational and less marred with statistical biases of the kind we call data snooping. So all I am saying is "what is it that we don't know", and my advice is what to avoid, no more.

You can live longer if you avoid death, get better if you avoid bankruptcy, and become prosperous if you avoid blowups in the fourth quadrant.

Now you would think that people would buy my arguments about lack of knowledge and accept unpredictability. But many kept asking me "now that you say that our measures are wrong, do you have anything better?"

I used to give the same mathematical finance lectures for both graduate students and practitioners before giving up on academic students and grade-seekers. Students cannot understand the value of "this is what we don't know"—they think it is not information, that they are learning nothing. Practitioners on the other hand value it immensely. Likewise with statisticians: I never had a disagreement with statisticians (who build the field)—only with users of statistical methods.

Spyros Makridakis and I are editors of a special issue of a decision science journal, The International Journal of Forecasting. The issue is about "What to do in an environment of low predictability". We received tons of papers, but guess what? Very few addressed the point: they mostly focused on showing us that they predict better (on paper). This convinced me to engage in my new project: "how to live in a world we don't understand".

So for now I can produce phronetic rules (in the Aristotelian sense of phronesis, decision-making wisdom). Here are a few, to conclude.

Phronetic Rules: What Is Wise To Do (Or Not Do) In The Fourth Quadrant

1) Avoid Optimization, Learn to Love Redundancy. Psychologists tell us that getting rich does not bring happiness—if you spend it. But if you hide it under the mattress, you are less vulnerable to a black swan. Only fools (such as Banks) optimize, not realizing that a simple model error can blow through their capital (as it just did). In one day in August 2007, Goldman Sachs experienced 24 x the average daily transaction volume—would 29 times have blown up the system? The only weak point I know of financial markets is their ability to drive people & companies to "efficiency" (to please a stock analyst’s earnings target) against risks of extreme events.

Indeed some systems tend to optimize—therefore become more fragile. Electricity grids for example optimize to the point of not coping with unexpected surges—Albert-László Barabási warned us of the possibility of a NYC blackout like the one we had in August 2003. Quite prophetic, the fellow. Yet energy supply kept getting more and more efficient since. Commodity prices can double on a short burst in demand (oil, copper, wheat) —we no longer have any slack. Almost everyone who talks about "flat earth" does not realize that it is overoptimized to the point of maximal vulnerability.

Biological systems—those that survived millions of years—include huge redundancies. Just consider why we like sexual encounters (so redundant to do it so often!). Historically populations tended to produce around 4-12 children to get to the historical average of ~2 survivors to adulthood.

Option-theoretic analysis: redundancy is like being long an option. You certainly pay for it, but it may be necessary for survival.

2) Avoid prediction of remote payoffs—though not necessarily ordinary ones. Payoffs from remote parts of the distribution are more difficult to predict than closer parts.
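A rough simulation can illustrate why remote parts of a distribution are harder to pin down than central ones. The sketch below repeatedly samples a fat-tailed distribution and compares how much the estimated median and the estimated 99.9th percentile vary from run to run. The Pareto distribution, its tail index of 1.5, the sample sizes, and the helper names are all assumptions chosen for illustration; none of them come from the essay.

```python
import random
import statistics


def quantile(sample, q):
    """Empirical quantile: the value at position q in the sorted sample."""
    ordered = sorted(sample)
    index = min(int(q * len(ordered)), len(ordered) - 1)
    return ordered[index]


def relative_spread(q, runs=200, size=2000, alpha=1.5, seed=0):
    """Standard deviation of the quantile estimate across independent runs,
    divided by its mean, for Pareto samples with tail index `alpha`."""
    rng = random.Random(seed)
    estimates = [
        quantile([rng.paretovariate(alpha) for _ in range(size)], q)
        for _ in range(runs)
    ]
    return statistics.stdev(estimates) / statistics.mean(estimates)


center = relative_spread(0.5)    # the median, a "closer part"
tail = relative_spread(0.999)    # a remote part of the distribution

print(f"relative spread of median estimate:           {center:.3f}")
print(f"relative spread of 99.9th percentile estimate: {tail:.3f}")
```

With the same amount of data, the tail estimate moves around far more than the median estimate, which is one way to read the rule: the further out the payoff sits, the less the sample says about it.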

A general principle is that, while in the first three quadrants you can use the best model you can find, this is dangerous in the fourth quadrant: no model should be better than just any model.

3) Beware the "atypicality" of remote events. There is a sucker's method called "scenario analysis" and "stress testing"—usually based on the past (or some "make sense" theory). Yet I show in the appendix how past shortfalls do not predict subsequent shortfalls. Likewise, "prediction markets" are for fools. They might work for a binary election, but not in the Fourth Quadrant. Recall the very definition of events is complicated: success might mean one million in the bank ...or five billion!

4) Time. It takes much, much longer for a time series in the Fourth Quadrant to reveal its property. At the worst, we don't know how long. Yet compensation for bank executives is done on a short term window, causing a mismatch between observation window and necessary window. They get rich in spite of negative returns. But we can have a pretty clear idea if the "Black Swan" can hit on the left (losses) or on the right (profits).

The point can be used in climatic analysis. Things that have worked for a long time are preferable—they are more likely to have reached their ergodic states.

5) Beware Moral Hazard. It is optimal to make a series of bonuses betting on hidden risks in the Fourth Quadrant, then blow up and write a thank you letter. Fannie Mae and Freddie Mac's Chairmen will in all likelihood keep their previous bonuses (as in all previous cases) and even get close to 15 million of severance pay each. ...

And finally, as the complete looting of the US Treasury by the Republican cabal becomes obvious to all, Bob Morris is echoing Kunstler's call to rename the thieves - The party that wrecked America.

It’s time to end the looting of this country by a tiny fanatical elite who use their extremist political philosophy to justify greed and plundering. Look, lots of them belong in prison. Once we get Obama, a centrist adult, in the White House then perhaps we can start to clean up the mess these thieves have left us. But none of that will happen with McCain in the White House as the thievery will simply continue.